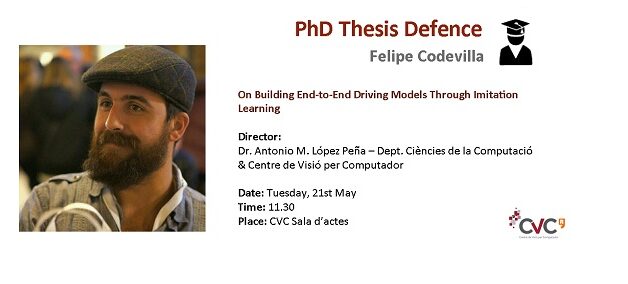

CVC has a new PhD on its record!

Abstract:

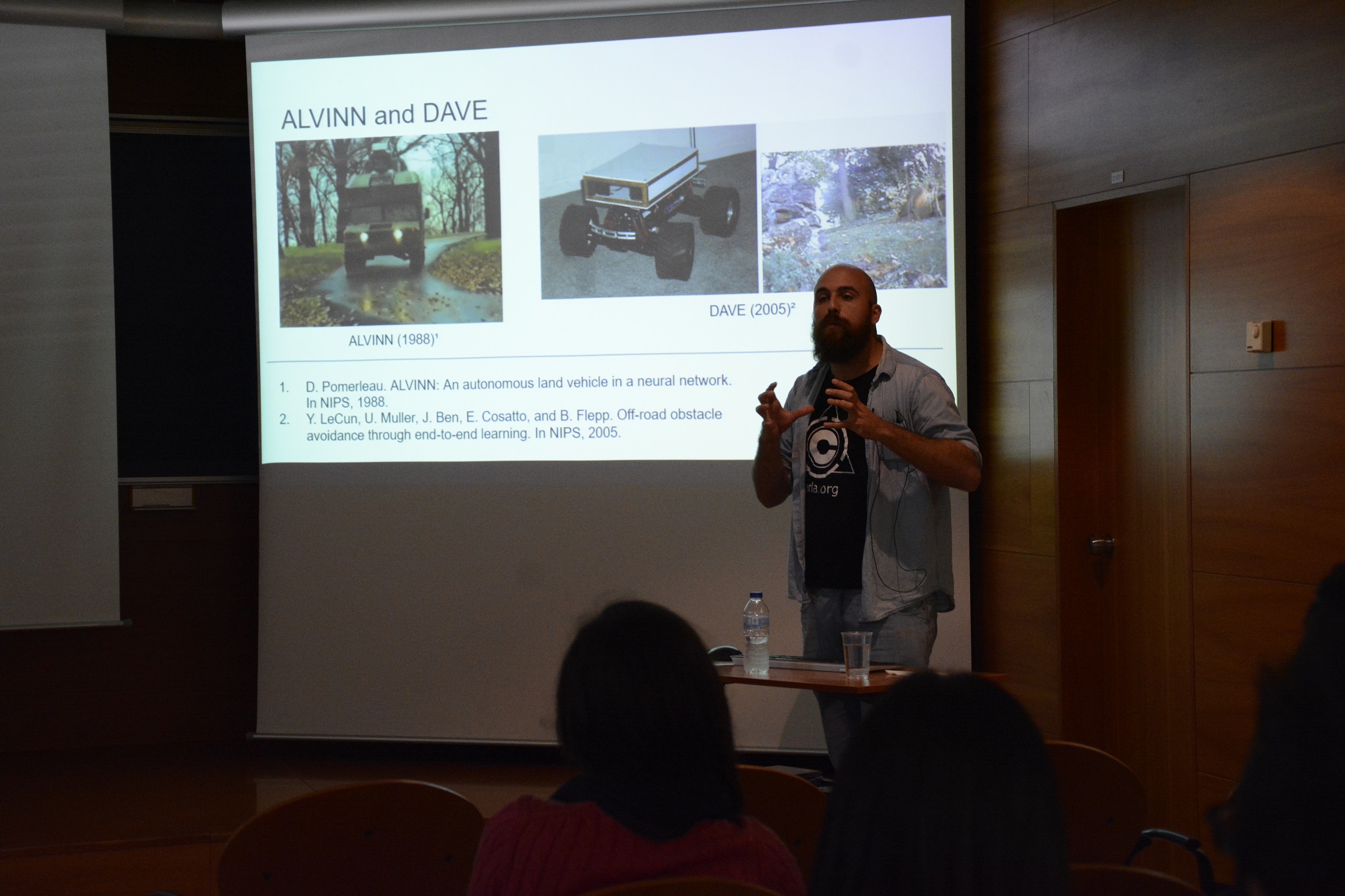

Autonomous vehicles are now widely regarded as a certain part of the future. Virtually all the major car-makers are racing to produce fully autonomous vehicles. These car-makers usually rely on modular pipelines to design autonomous vehicles. This strategy decomposes the problem into a variety of tasks such as object detection and recognition, semantic and instance segmentation, depth estimation, SLAM and place recognition, as well as planning and control. Each module requires a separate set of expert algorithms, which are costly, especially in terms of human labor and data labeling. An alternative that has recently attracted considerable interest is end-to-end driving. In the end-to-end driving paradigm, perception and control are learned simultaneously using a deep network. These sensorimotor models are typically obtained by imitation learning from human demonstrations. The main advantage is that this approach can learn directly from large fleets of human-driven vehicles without requiring a fixed ontology or extensive labeling. However, scaling end-to-end driving methods to behaviors more complex than simple lane keeping or lead-vehicle following remains an open problem. In this thesis, in order to achieve more complex behaviors, we address some issues that arise when creating end-to-end driving systems through imitation learning. The first is the need for an environment for algorithm evaluation and for collecting driving demonstrations. To this end, we participated in the creation of the CARLA simulator, an open-source platform built from the ground up for autonomous driving validation and prototyping. Since the end-to-end approach is purely reactive, there is also a need to provide an interface with a global planning system. For this, we propose conditional imitation learning, which conditions the produced actions on a high-level command. 
Evaluation is also a concern and is commonly performed by comparing the end-to-end network output to some pre-collected driving dataset. We show that this offline comparison is surprisingly weakly correlated with actual driving performance, and we propose strategies for better data acquisition and a better comparison procedure. Finally, we confirm well-known generalization issues (due to dataset bias and overfitting), identify new ones (due to dynamic objects and the lack of a causal model), and observe training instability; these problems require further research before end-to-end driving through imitation can scale to real-world driving.
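
To make the idea of conditioning actions on a high-level command more concrete, here is a minimal sketch in plain Python. It is not the thesis implementation: the command names, the `BranchedPolicy` class, the linear "heads", and the squared-error update are all assumptions chosen for illustration. It shows one possible way to condition a policy, namely keeping a separate output head per command and letting the command coming from the planner select which head acts and which head is trained.

```python
import random

# Hypothetical command set; the abstract only says "some high-level command".
COMMANDS = ("follow_lane", "turn_left", "turn_right", "go_straight")


class BranchedPolicy:
    """Toy linear policy with one output head per high-level command."""

    def __init__(self, n_features, seed=0):
        rng = random.Random(seed)
        # One weight vector per command; each maps features to a steering value.
        self.heads = {
            c: [rng.uniform(-1.0, 1.0) for _ in range(n_features)]
            for c in COMMANDS
        }

    def act(self, features, command):
        """Return the steering value of the head selected by the command."""
        weights = self.heads[command]
        return sum(w * x for w, x in zip(weights, features))

    def imitation_step(self, features, command, expert_action, lr=0.1):
        """One gradient step on the squared error against the expert action.

        Only the head selected by the command is updated.
        """
        error = self.act(features, command) - expert_action
        head = self.heads[command]
        for i, x in enumerate(features):
            head[i] -= lr * 2.0 * error * x
        return error ** 2


if __name__ == "__main__":
    policy = BranchedPolicy(n_features=3)
    features = [1.0, 0.5, -0.5]  # stand-in for perception features
    for _ in range(50):
        policy.imitation_step(features, "turn_left", expert_action=-0.3)
    print(policy.act(features, "turn_left"))
```

In this sketch the same observation can yield different actions depending on the command, which is what lets a purely reactive policy be steered by a global planner. A real system would replace the hand-made feature vector with the output of a deep perception network.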

Keywords: autonomous driving, computer vision, machine learning, deep learning, imitation learning, simulation.