Tuesday, September 16

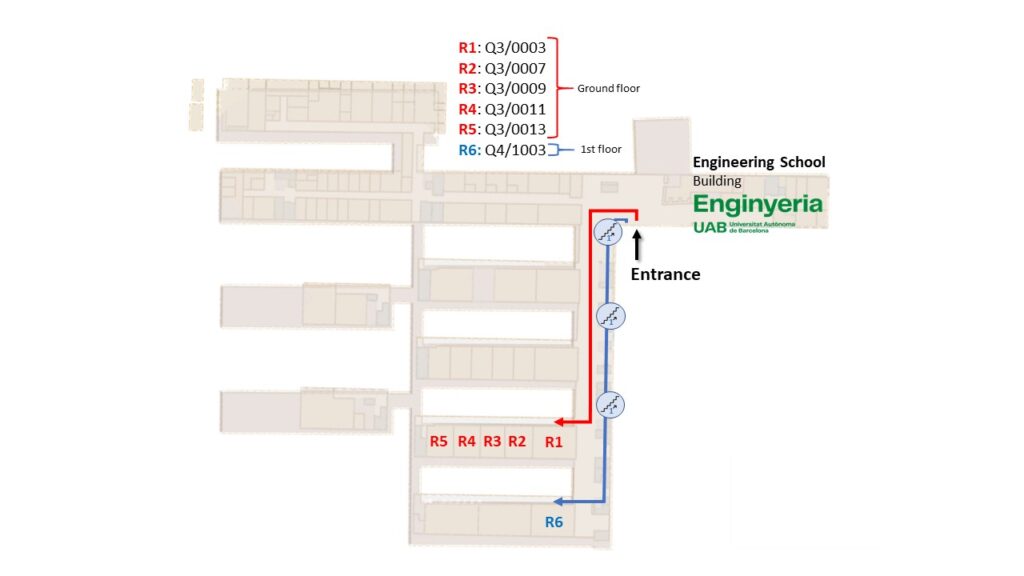

Track 1: 3D & OTHERS | Room Q4/1003

Track 1: 3D & OTHERS | Room Q4/1003

Track 2: ADAS & OTHERS | Q3/0009

Track 2: ADAS & OTHERS | Q3/0009

Track 3: APPLICATIONS | Q3/0011

Track 3: APPLICATIONS | Q3/0011

Track 4: GENERATION | Q3/0013

Track 4: GENERATION | Q3/0013

Track 5: HUMAN | Q3/0003

Track 5: HUMAN | Q3/0003

Track 6: MEDICAL DRIVING | Q3/0007

Track 6: MEDICAL DRIVING | Q3/0007

Track 7: MISCELANEOUS | Q3/0013

Track 7: MISCELANEOUS | Q3/0013

Escola Enginyeria, Edifici Q, UAB

14:30 – 15:15

Advisor/s: Gloria Haro (UPF)

Abstract:

This study presents a method for recovering the 3D geometry of a dynamic scene captured by a single camera, featuring one moving object. Since VGGT cannot handle moving objects, we adapt it for this task by proposing a pipeline that leverages SAM2 masking to extract the moving objects, combined with VGGT’s ability to infer occluded geometry. We propose a depth rescaling step in the pipeline to aggregate the geometry information from all frames. Results demonstrate the effectiveness of VGGT in static, single-frame scenarios, while the proposed method successfully places the moving object in 3D space. Unlike optimization-based approaches, this feed-forward, non-iterative method is time-efficient, lightweight, and accessible, enabling 3D scene reconstruction from smartphone videos for a wider audience.

Committee:

President: Fernando Vilariño (UAB)

Secretary: Josep R. Casas (UPC)

Vocal: Hunor Laczko (UB)

Advisor/s: Josep R. Casas (UPC)

Abstract: Autonomous vehicles require robust environmental perception. LiDAR is a key modality, yet retro reflective materials and elements (e.g., traffic signs and license plates) can saturate receivers and generate structured artifacts that propagate to downstream modules. Disabling or reducing the emission power when retroreflectors are detected is impractical in urban scenes densely populated with reflective elements as it would create blind areas and unstable coverage. In addition, when multiple LiDAR units operate on the same platform or in proximity, mutual illumination and unsynchronized pulse trains introduce cross-talk contamination that further confounds detection. This thesis addresses the problem of mitigating retroreflector-induced artifacts in solid-state LiDAR point clouds while preserving genuine scene geometry…

Committee:

President: Joost van de Weijer (UAB)

Secretary: Gabriel Villalonga (CVC/UAB)

Vocal: Gloria Haro (UPF)

Advisor/s: Josep R. Casas (UPC), Santiago Royo (UPC)

Abstract: The deployment of LiDAR-based perception systems in autonomous mobility and industrial automation demands high-fidelity

datasets that capture realistic sensor behavior under diverse conditions. This work presents an enhanced digital twin of a MEMS-

based solid-state LiDAR implemented in NVIDIA Isaac Sim, incorporating advanced radiometric modeling to bridge the domain

gap between synthetic and real point-cloud data. By integrating Monte Carlo beam-divergence sampling and a Cook–Torrance

BRDF framework, our model accurately reproduces underfilled and overfilled beam regimes, incidence-angle dependencies, and

material-specific reflectance effects. Validation experiments demonstrate representative intensity decay in distance sweeps, cosine-

law angular falloff on Lambertian surfaces, and shaded angular responses across multiple material reflectances. A beam-divergence

study further reveals under-, overfilled regions and multi-target return phases, behaviors absent in Isaac Sim’s baseline model.

These contributions significantly improve synthetic LiDAR fidelity, enabling more reliable training and validation of perception

algorithms for safety-critical applications.

Committee:

President: Ismael Benito-Altamirano (UOC)

Secretary: Ramon Baldrich Caselles (UAB)

Vocal: Cesar Diaz (OMASHU S.L.)

15:15 – 16:00

Advisor/s: Sergio Escalera Guerrero (UB)

Abstract: The creation of realistic textures for 3D assets remains a central challenge in computer vision and graphics, as manual texturing is costly and time-intensive. This thesis proposes a modular pipeline that leverages generative AI to automate the process of 3D texture generation from an untextured mesh and a single reference image. The pipeline consists of three stages: (1) an image captioning module, comparing Gemma and Qwen models, to generate detailed fabric descriptions; (2) image synthesis with Stable Diffusion and FLUX, conditioned by Depth ControlNet, to render 2D textured views aligned with the 3D geometry; and (3) texture transfer using Step1X-3D, MaterialMVP, and UniTEX to project the generated images onto the mesh. To enable systematic evaluation, two datasets were created: one comprising 120 untextured 3D garment models across six categories, and another with 120 corresponding reference texture images. Each stage of the pipeline was analyzed through comparative experiments, focusing respectively on captioning accuracy, image–geometry consistency, and 3D texture generation performance. The final textured models were further evaluated along three key criteria: overall visual quality, adherence to the reference, and texture realism without baked-in shadows. This study provides a valuable evaluation framework and detailed analysis of the strengths and weaknesses of current state-of-the-art methods, highlighting their complementarity and trade-offs. By combining reproducible experimentation with critical assessment, the work contributes both a functional pipeline for automated 3D texturing and a reference for future research in scalable dataset creation and generative 3D content.

Committee:

President: Felipe Lumbreras (UAB)

Secretary: Montse Pardàs (UPC)

Vocal: Maria Vanrell Martorell (UAB)

Advisor/s: Montse Pardàs (UPC)

Abstract: This thesis investigates the estimation of truck speed from video recordings obtained with a fixed surveillance camera placed

perpendicular to the road. The camera setup is based on the EINAR2 system from ARH and positioned close to the passing vehicles.

A dataset of 50 truck videos with different speed, lightning and background conditions was collected. Several computational

methods for motion estimation were explored and compared. These include frame displacement estimation using SSIM-based

alignment, block matching with stable motion filtering, and optical flow approaches such as Farneback, FlowNet2, LiteFlowNet2,

and PWC-Net. The motion vectors were processed to obtain speed profiles in km/h, considering the geometric constraints of

the scene. The performance of each method was analyzed in terms of accuracy, robustness and computational efficiency. The

results provide insights on the advantages and limitations of classical and deep learning-based approaches for video-based speed

measurement. This work contributes to the development of reliable computer vision techniques for speed estimation in real-world

conditions.

Committee:

President: Coloma Ballester (UPF)

Secretary: Josep R. Casas (UPC)

Vocal: Santiago Royo (UPC)

Advisor/s: Ramon Morros (UPC)

Abstract:Accurate estimation of fruit size is essential in precision agriculture for yield prediction and quality assessment. This work presents a multitask learning (MTL) approach, MTL-YOLOX, designed to detect fruits and calculate their diameter jointly within a unified framework. Several datasets were used, including images with calibration references, synthetic datasets generated by removing the references with segmentation and filling, and a new dataset of real images without reference. The experimental results show that the proposed architecture achieves stable performance in detection and regression across all datasets. The quantitative results show that the calibration reference approach achieved very high accuracy (mAP@05 = 0.97, R² = 0.98, MAE = 1.4 mm). The proposed MTL-YOLOX model achieved comparable performance on images with reference (mAP@0.5 = 0.97, R² = 0.99, MAE = 1.5 mm), while maintaining high detection accuracy. On real datasets without calibration reference, detection remained robust (mAP@0.5 = 0.94), with diameter estimation achieving MAE = 3.6 mm and R² = 0.93, demonstrating the model’s ability to generalize without explicit calibration references. A case study on starch level estimation further demonstrates its potential for generalization to other agronomic regression tasks.

Committee:

President: Robert Benavente (UAB)

Secretary: Albert Clapés Sintes (UB)

Vocal: Carles Ventura Royo (UOC)

Advisor/s: Javier Vazquez Corral (UAB), Ramon Baldrich Caselles (UAB)

Abstract: In recent years, image relighting has emerged as a challenging task in computer vision, with applications in augmented reality, film production, photographic editing, and related fields. This thesis investigates the role of high-level scene information, such as depth and surface normals, in single-image relighting, exploring several strategies for their integration. To this end, we evaluate two main architectures: convolutional neural networks, which have traditionally been the dominant approach, and transformer-based models, which are increasingly demonstrating strong potential. Comparisons on both synthetic and real datasets show that transformers consistently outperform CNNs, while the naive incorporation of geometric cues offers only limited improvements, underscoring the need for more effective integration methods.

Committee:

President: Ismael Benito-Altamirano (UOC)

Secretary: Joost van de Weijer (UAB)

Vocal: Hunor Laczko (UB)

Advisor/s: Albert Clapés Sintes (UB)

Abstract: Video understanding is a key field in computer vision that seeks to extract meaningful spatiotemporal information from video streams. Unlike static image analysis, it requires modeling temporal dependencies and dynamic interactions across frames. Within this domain, action anticipation stands out as a particularly challenging task, as it goes beyond recognizing past or ongoing events and instead focuses on inferring what and when actions will occur in the future. Such predictive capabilities are especially valuable in dynamic contexts such as surveillance, autonomous driving, or human–robot interaction.

In recent years, the intersection between video understanding and sports analytics has received increasing attention. Large-scale datasets such as SoccerNet have enabled progress in tasks like tracking, event detection, and action spotting. However, action anticipation has received comparatively little attention in sports, despite its potential to provide strategic insights and enhance real-time decision-making. The unpredictability and fast dynamics of human behavior in sports make anticipation especially difficult. Unlike more scripted domains (e.g., cooking or instructional videos), which have been bradly studied, sports are characterized by open-ended interactions among multiple agents, evolving strategies, and constantly changing external conditions.

This work addresses action anticipation in basketball, focusing on predicting which team will secure possession of the ball after a missed shot. Rebounding is one of the most complex actions to anticipate, as it involves multiple players contesting the ball in tight spaces, frequent occlusions, and subtle factors such as timing, positioning, and momentum. Moreover, the irregularity of ball bounces introduces further uncertainty, making prediction even more challenging. In particular, two experimental setups are explored: Offline Rebound Anticipation, where video clips are truncated exactly t seconds before the rebound, and the model must predict the upcoming outcome; and Online Rebound Anticipation, where the exact timestamp of the event is unknown and the model must decide whether a rebound will occur within a given anticipation window. In addition, two auxiliary tasks—action classification and action spotting—are considered to complement the main objective.

The main contributions of this work include the creation of a self-curated dataset for various video understanding tasks in the domain of basketball, comprising 100,000 videos and over 300 hours of footage, along with more than 2,000 manually annotated events. Additionally, the application of action anticipation techniques to rebounds, a particularly challenging action to predict due to the number of players involved and, to the best of the author’s knowledge, one that has not been previously addressed using deep learning. Finally, baseline results are presented on this new benchmark using two anticipation models, including one representing the current state of the art in sports video understanding.

Committee:

President: David Masip (Universitat Oberta de Catalunya)

Secretary: Gloria Haro (UPF)

Vocal: Jordi Gonzàlez (CVC-UAB)

Advisor/s: Carles Ventura Royo (UOC)

Abstract: This thesis presents the development and evaluation of a deep-learning pipeline for the classification and segmentation of oral lesions using clinical imaging data. The study addresses the need for more precise decision-support tools in oral lesion detection and delineation. The dataset, provided by the Universidad Complutense de Madrid in collaboration with UNED, contains more than 5,000 labelled oral-cavity images and supports two levels of granularity: (i) healthy, benign, potentially malignant, and malignant; and (ii) 94 specific lesion types (e.g., Abrikossoff’s tumor, bullous disease, erythema, leukoedema, lipoma).

The pipeline is end-to-end and clinically grounded: it enforces patient-level, leak-free splits; standardizes and harmonizes annotations; and applies clinically plausible augmentations to improve robustness without distorting anatomy. Severe class imbalance – especially at the fine-grained lesion level – is mitigated with balanced sampling and label aggregation when needed. The modelling stack remains architecture-agnostic so different segmentation architectures can be compared fairly, and the design emphasizes stability, generalization, and reproducibility. Model training and hyper-parameter optimization are conducted using advanced computational resources, including cloud-based GPUs provided by the AiWell Lab.

Evaluation uses both standard classification and segmentation metrics, with per-class and size-aware analyses, ablations over key choices (e.g., architecture, augmentation strength, and sampling balance), and qualitative reviews of typical successes and failure modes. Finally, study also discuss limitations (long-tail labels, acquisition variability) and outline future directions and prospective clinical validation.

Committee:

President: Joan M Núñez do Rio (Universitat Oberta de Catalunya)

Secretary: Carles Sanchez Ramos (CVC/UAB)

Vocal: Carlos Martín Isla (UB)

16:00 – 16:45

Advisor/s: Antonio Agudo (UPF)

Abstract: Endoscopy is an essential procedure in medical imaging, routinely applied for diagnostic, prognostic and therapeutic purposes. Developing robust methods for 3D reconstruction of endoscopic videos has the potential to improve the visualization of complex anatomies, increase diagnostic accuracy, and guide surgical procedures. Despite recent advancements the task remains highly challenging. The deformable nature of soft tissues makes classical computer-vision algorithms useless, and additional difficulties arise from the widespread use of monocular cameras, unknown camera parameters, occlusions, illumination changes, motion blur and other artifacts.

In this work, we present Endo-4DTS, a self-supervised pipeline based on Triangle Splatting for 3D scene synthesis of deformable endoscopy scenes from monocular videos with a static camera. We are, to the best of our knowledge, the first to propose the application of Triangle Splatting to endoscopic scenes, and the first to extend Triangle Splatting to deformable scenes. Our approach represents the endoscopic environment with a canonical set of triangles, optimized jointly with a deformation network that captures the rotation, translation, scale and color changes of the canonical triangles to the observation space. To handle the complexities of endoscopic deformable scenes, we incorporate a set of additional losses: a depth loss and depth smoothness loss applied to the canonical triangles using an depth map estimated with a pre-trained state-of-the-art model for monocular depth estimation, a normal consistency loss applied to both the canonical and deformed surfaces, and an $l_2$ loss on the rotation, translation and scale parameters predicted by the deformation network to encourage stable and physically plausible transformations.

By jointly optimizing these objectives, Endo-4DTS enables 3D synthesis of deforming tissues in challenging endoscopic scenes. We evaluate our approach on multiple endoscopic videos with time-varying tissues and illumination, showing that Triangle Splatting can be effectively extended to deformable endoscopic scenes and provides a useful representation for 3D scene modeling.

Committee:

President: Montse Pardàs (UPC)

Secretary: Debora Gil (UAB)

Vocal: Oriol Ramos (UAB)

Advisor/s: Josep R. Casas (UPC)

Abstract: Cooperative perception (CP) has emerged as a promising approach in autonomous driving, enabling connected autonomous vehicles (CAVs) to overcome occlusions and sensor limitations by sharing sensor data, thereby enhancing environmental awareness and road safety. Achieving robust and highly-reliable CP performance requires training models on diverse traffic scenarios, covering rare and challenging traffic dynamics. However, large-scale real-world datasets remain limited in CP due to the high costs of multi-agent deployments and labour-intensive labelling. While synthetic datasets offer a practical alternative, providing scalable data generation and accurate annotations, models trained solely on virtual data often struggle to generalize to real-world scenarios due to the domain gap. In this work, we explore the utility of synthetic data in training processes to enhance real-world performance, thereby reducing the effects of the domain discrepancies. We benchmark two state-of-the-art intermediate-fusion 3D LiDAR-based object detectors on synthetic and real-world datasets, under vehicle-to-vehicle (V2V) communication settings. Three training strategies are compared: training from scratch, synthetic-to-real transfer, and mixed-dataset training with varying synthetic–real ratios. Our results reveal that pre-training on synthetic data before fine-tuning on real datasets delivers the strongest real-world gains (+2% AP@0.5, +2–4% AP@0.7 on car detection), while mixed training enhances cross-domain generalization, especially with larger synthetic contributions. These findings highlight that virtual data, rather than merely replacing real data, can serve as an effective complementary source in developing reliable CP systems.

Committee:

President: Ramon Morros (UPC)

Secretary: Santiago Royo (UPC)

Vocal: Ramon Baldrich Caselles (UAB)

Advisor/s: Jordi Sanchez Riera (IRI)

Abstract: The rise of e-Sports, particularly League of Legends, has produced vast amounts of gameplay footage, creating a demand for efficient tactical analysis and in-game coaching. This thesis presents a multimodal AI system for automatic tactical coaching and strategic assistance, integrating a fine-tuned YOLOv8 model for champion detection with large language models to generate context-aware explanations of teamfight events. Additionally, the system employs graph-based features and spectral embedding techniques to cluster teamfights and predict their outcomes. Experiments demonstrate that this approach accurately identifies key tactical situations, provides meaningful explanations of in-game actions, and predicts fight outcomes effectively. The work contributes a novel dataset, vision-language model fine-tuning strategies, and a unified pipeline for semantic coaching in complex MOBA gameplay.

Committee:

President: Jorge Bernal (UAB)

Secretary: Adam Phillips (UPF)

Vocal: Gerard De Mas Giménez (UPC)

Advisor/s: Sergio Escalera Guerrero (UB)

Abstract: Virtual Try-Off (VTOFF) – the inverse problem of Virtual Try-On (VTON) – aims to reconstruct the canonical garment from its worn version in the person image. The task appears to be an ill-posed problem due to the one-to-many mapping nature. Its challenges include the need to reconstruct unseen details due to occlusion, the need to infer original cloth shape under deformations, and the need to transfer exact visible fine-grained details such as textures and logos. Because of these different difficulties, adapting VTON methods to VTOFF is far from straightforward. In this work, we present a systematic study of diffusion-based approaches for VTOFF, taking the common VTON architecture – Dual-UNet Diffusion Model- as the starting point. Our experiments cover three axes of design: (i) generation backbone, comparing Stable Diffusion 1.5 vs Stable Diffusion XL, and base vs inpainting variants; (ii) conditioning inputs, including ablations on different mask designs, masked/unmasked inputs for IP-Adapter, and text prompt augmentation; (iii) losses and training strategies, with the Leffa attention module, curriculum schedules, and the impact of perceptual objectives. Extensive experiments on the VITON-HD dataset reveal trade-offs across various options. Our framework achieves state-of-the-art performance on the primary metric DISTS and other relevant metrics such as LPIPS, FID, KID and SSIM, providing both strong baselines and insights for future Virtual Try-Off research.

Committee:

President: Pablo Arias (UPF)

Secretary: Felipe Lumbreras (UAB)

Vocal: Jordi Gonzàlez (CVC-UAB)

Advisor/s: Fernando Vilariño (UAB)

Abstract: Implicit neural representations (INRs) have proven effective for continuous signal encoding, yet classical networks exhibit a spectral bias that hampers their ability to capture high-frequency details. Quantum Implicit Representation Networks (QIREN) address this limitation by leveraging parameterized quantum circuits with inherent Fourier structures, enabling more compact and expressive frequency modeling than their classical counterparts. This thesis introduces Quantum Neural Radiance Fields (Q-NeRF), a hybrid framework that integrates QIREN modules into the state-of-the-art Nerfacto pipeline for 3D scene reconstruction and novel view synthesis. The proposed architecture preserves Nerfacto’s efficient sampling, pose refinement, and hash encoding strategies while replacing selected density and radiance prediction components with quantum-enhanced modules. To evaluate its feasibility, we benchmark Q-NeRF against classical baselines on standard indoor multi-view datasets using quantitative (PSNR, SSIM, LPIPS) and qualitative comparisons. Our results show that hybrid quantum-classical models can achieve competitive reconstruction quality under severe computational constraints, with quantum modules particularly well-suited for capturing fine-scale, view-dependent details. Although current experiments rely on simulators limited to small qubit counts, they demonstrate the potential of quantum encodings for addressing spectral bias in implicit representations. Overall, this work provides one of the first systematic studies of quantum-classical neural radiance fields, offering insights into their advantages, limitations, and pathways toward scalable quantum-enabled 3D reconstruction

Committee:

President: Meysam Madadi (UB)

Secretary: Coloma Ballester (UPF)

Vocal: Hunor Laczko (UB)

16:45 – 17:30

Advisor/s: Gabriel Villalonga (CVC/UAB)

Abstract: Transformers have rapidly gained prominence in computer vision since Google’s introduction of the Vision Transformer (ViT) in 2020. ViT adapts the attention mechanism from natural language processing to image data, with tokens serving as the fundamental representation. Subsequent research has explored numerous extensions and modifications to enhance performance and efficiency. This study investigates tokenization strategies and pruning techniques, examining their individual and combined effects on token behavior and attention. The proposed approaches are evaluated on two benchmark datasets: ImageNet and CIFAR-100.

Committee:

President: Coloma Ballester (UPF)

Secretary: Albert Clapés Sintes (UB)

Vocal: Carles Sanchez Ramos (CVC/UAB)

Track 3 – Q3/0011 Marco Terral | WildSVG: Toward Reliable SVG Generation under Real-World Conditions

Advisor/s: David Vázquez (UAB), Maria Vanrell Martorell (UAB)

Abstract: StarVector is a multimodal LLM designed to generate SVGs from either rendered images of SVGs or textual descriptions. Through supervised fine-tuning and reinforcement learning, it achieves strong results on tasks involving clean SVG-based input. However, it struggles with real-life images, which introduce noise and visual clutter that degrade performance. This project aims to extend StarVector’s capabilities to real-world scenarios by presenting a benchamerdeveloping a dedicated benchmark, datasets and exploring architectural and training adaptations to improve robustness. The goal is to enable reliable vision-to-SVG translation in complex, natural scenes.

We introduce SVG extraction, the task of translating visual inputs into structured vector graphics. While existing multimodal models such as StarVector achieve strong results when generating SVGs from clean renderings or textual descriptions, they struggle in real-world scenarios where natural images introduce noise, clutter, and domain shifts. To bridge this gap, we extend StarVector’s capabilities toward robust vision-to-SVG translation in the wild.

As a foundation, we contribute two new datasets: Natural Wild SVG, composed of complex real-world images paired with SVG annotations, and Synthetic Wild SVG, which integrates complex and elaborate SVG designs into real-life scenarios, providing challenging inputs that bridge between synthetic and natural settings. Using these resources, we establish the first comprehensive benchmark for SVG extraction, systematically evaluating StarVector and related models under both synthetic and natural settings.

Committee:

President: Josep R. Casas (UPC)

Secretary: Diego Porres Bustamante (CVC/UAB)

Vocal: Ismael Benito-Altamirano (UOC)

Advisor/s: Antonio Agudo (UPF)

Abstract: Fine-grained action recognition remains difficult in sports due to rapid motion, occlusion, unconventional body configurations, and subtle distinctions between similar movements. We argue the main bottleneck is before classification—detection and pose estimation—and study how strengthening these stages affects end-to-end accuracy. Using FineGym benchmark as a representative setting, we conduct early experiments across multiple detectors and pose estimators to identify robust upstream candidates. Guided by this analysis, we adopt a prompted, segmentation-based localization strategy and a transformer pose estimator as core components, with Mask2Former as an automatic segmentation baseline. For downstream evaluation, we use PoseConv3D as a diagnostic probe rather than a contribution, quantifying sensitivity to input quality; note that reported PoseConv3D results rely on ground-truth boxes, and prior FineGym work highlights detectors’ difficulty with fast motion which evidences that the upstream tasks are the principal failure point. We assemble a practical pipeline that (i) initializes and propagates instance segmentation for consistent localization, (ii) extracts 2D keypoints, and (iii) classifies actions via 3D heatmap volumes, with lightweight post-processing to correct masks. To assess generalization, we construct a balanced test split to probe sensitivity to input quality and domain variation. Strengthening upstream stages yields strong downstream gains and provides a template for robust fine-grained recognition in other high-dynamics domains.

Committee:

President: Meysam Madadi (UB)

Secretary: Ramon Morros (UPC)

Vocal: Marcel Granero Moya (UPF)

Advisor/s: Carlos Martín Isla (UB)

Abstract: Fetal ultrasound is an important part of prenatal care, but in many low-resource settings its use is limited by the shortage of trained specialists and high end equipment. Blind sweep protocols offer a way forward by allowing minimally trained staff to capture standardized video scans, but automated analysis is needed to unlock their full potential. In this work, we adapt existing models to the task of blind sweep analysis and build an interactive system that combines semi-automatic ultrasound video processing with a language model assistant. We first test how well current methods generalize on blind sweeps, then finetune a chatbot assistant for interactive use, and finally measure the runtime and memory usage of the full pipeline on to check if it can run on affordable devices. Our results show that the system can provide accurate measurements while staying lightweight enough for use with limited resources. By bringing computer vision.

Committee:

President: Montse Pardàs (UPC)

Secretary: Jorge Bernal (UAB)

Vocal: Cesar Diaz (OMASHU S.L.)

Advisor/s: Jordi Gonzàlez (CVC-UAB)

Abstract: Large language models (LLMs) excel in many tasks but can produce undesirable outputs such as toxic or factually incorrect content. We introduce Dynamically Scaled Activation Steering (DSAS), a novel framework for context-aware behavior modulation in LLMs. DSAS operates at inference time by adjusting hidden activations only when inputs exhibit problematic attributes (e.g. hate speech), and otherwise leaves the model unchanged. This selective intervention preserves the model’s original fluency and reasoning on benign inputs while steering responses to avoid toxic outputs when needed. In experiments, DSAS significantly improves the alignment–capability trade-off: it reduces toxic content in generated text without significant loss of coherence or accuracy. Applied on top of existing activation editing methods, DSAS consistently outperforms their always-on counterparts, underlining the value of conditional, input-triggered steering. These results highlight DSAS as an efficient and interpretable approach to fine-grained model alignment that enhances safety without retraining or degrading overall performance.

Committee:

President: Fernando Vilariño (UAB)

Secretary: Lluís Gómez Bigorda (UAB)

Vocal: Antonio Agudo (UPF)