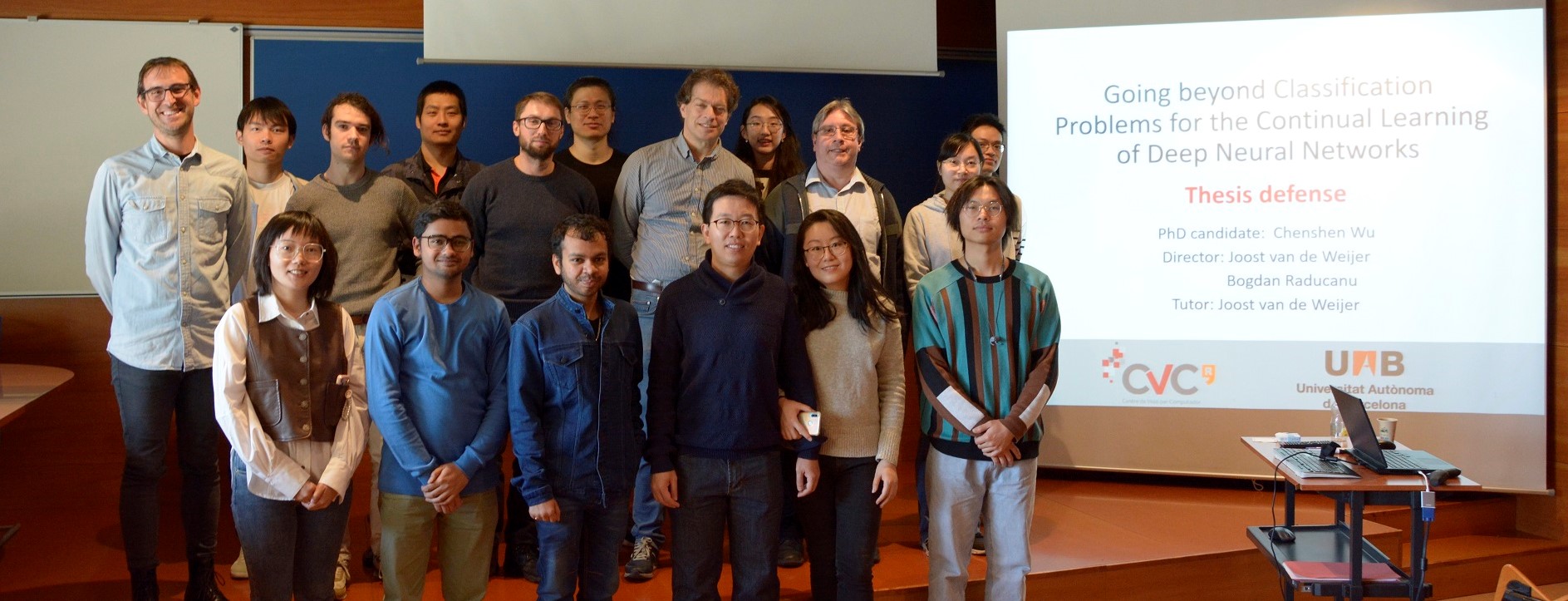

CVC has a new PhD on its record!

Chenshen Wu successfully defended his dissertation in Computer Science on March 16, 2023, and he now holds a Doctor of Philosophy from the Universitat Autònoma de Barcelona.

What is the thesis about?

Deep learning has made tremendous progress in the last decade due to the explosion of training data and computational power. Through end-to-end training on a large dataset, learned image representations have become more discriminative than the previously used hand-crafted features. However, for many real-world applications, training and testing on a single dataset is not realistic, as the test distribution may change over time. Continual learning takes this situation into account: the learner must adapt to a sequence of tasks, each with a different distribution. If one naively continues training the model on a new task, its performance on the previously learned data drops dramatically. This phenomenon is known as catastrophic forgetting.

Many approaches have been proposed to address this problem, and they can be divided into three main categories: regularization-based approaches, rehearsal-based approaches, and parameter isolation-based approaches. However, most existing works focus on image classification, and many other computer vision tasks have not been well explored in the continual learning setting. Therefore, in this thesis, we study continual learning for image generation, object re-identification, and object counting.

For the image generation problem, since the model can generate images from the previously learned tasks, rehearsal can be applied without any limitation. We developed two methods based on generative replay. The first one uses the generated images for joint training together with the new data. The second one is based on pixel-wise alignment of the outputs. We extensively evaluate these methods on several benchmarks.
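The first method, joint training with replayed images, can be illustrated with a minimal sketch. This is not the thesis implementation: the function name `build_replay_batch`, the `replay_ratio` parameter, and the stand-in generator are hypothetical and only show how generated samples from a frozen copy of the previous model might be mixed with data from the new task.

```python
import random


def build_replay_batch(new_samples, previous_generator, batch_size,
                       replay_ratio=0.5, rng=None):
    """Mix new-task samples with samples replayed from the previous generator.

    `previous_generator` is a hypothetical callable standing in for a frozen
    copy of the generator trained on earlier tasks; it takes a random source
    and returns one generated sample.
    """
    rng = rng or random.Random(0)
    n_replay = int(round(batch_size * replay_ratio))
    n_new = batch_size - n_replay

    # Real data from the task currently being learned (sampled with replacement).
    real = [(x, "new") for x in rng.choices(new_samples, k=n_new)]
    # Generated data that stands in for the tasks learned earlier.
    replayed = [(previous_generator(rng), "replay") for _ in range(n_replay)]

    batch = real + replayed
    rng.shuffle(batch)
    return batch


# Usage: integers stand in for images, and the "generator" returns a constant.
batch = build_replay_batch(list(range(100)), lambda r: -1, batch_size=8)
```

Training on such mixed batches lets the model keep seeing examples of earlier tasks without storing any of the original data.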

Next, we study continual learning for object Re-Identification (ReID). Although most state-of-the-art methods for ReID and continual ReID use a softmax-triplet loss, we found that it is better to approach the ReID problem from a meta-learning perspective, because continual learning of ReID can benefit substantially from the generalization ability of meta-learning. We also propose a distillation loss and found that removing the positive pairs before applying the distillation loss is critical.

Finally, we study continual learning for the counting problem. We study the mainstream method based on density maps and propose a new approach for density map distillation. We found that fixing the counter head is crucial for the continual learning of object counting. To further improve results, we propose an adaptor that adapts the changing feature extractor to the fixed counter head. Extensive evaluation shows that this results in improved continual learning performance.
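As a rough illustration of the density-map setting, the sketch below shows how a count is read from a density map and how a generic pixel-wise distillation term could compare the maps predicted by the old and new models. The mean squared difference used here is an assumption chosen for simplicity, not necessarily the loss proposed in the thesis, and both function names are hypothetical.

```python
def count_from_density(density_map):
    """The predicted object count is the sum over all density-map pixels."""
    return sum(sum(row) for row in density_map)


def density_distillation_loss(old_map, new_map):
    """Mean squared pixel-wise difference between two density maps.

    `old_map` would come from the frozen model trained on earlier tasks and
    `new_map` from the model being trained on the new task (assumed setup).
    """
    total = 0.0
    n_pixels = 0
    for old_row, new_row in zip(old_map, new_map):
        for old_value, new_value in zip(old_row, new_row):
            total += (old_value - new_value) ** 2
            n_pixels += 1
    return total / n_pixels


# Usage with small 2x2 maps as stand-ins for real predictions.
old_prediction = [[0.5, 0.5], [1.0, 1.0]]
new_prediction = [[0.5, 0.5], [1.0, 0.0]]
count = count_from_density(old_prediction)
loss = density_distillation_loss(old_prediction, new_prediction)
```

A term of this kind penalizes the new model when its density maps drift away from those of the old model on the same input.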

Keywords: continual learning, generative adversarial model, object re-identification, counting.