CVC Seminar

Abstract:

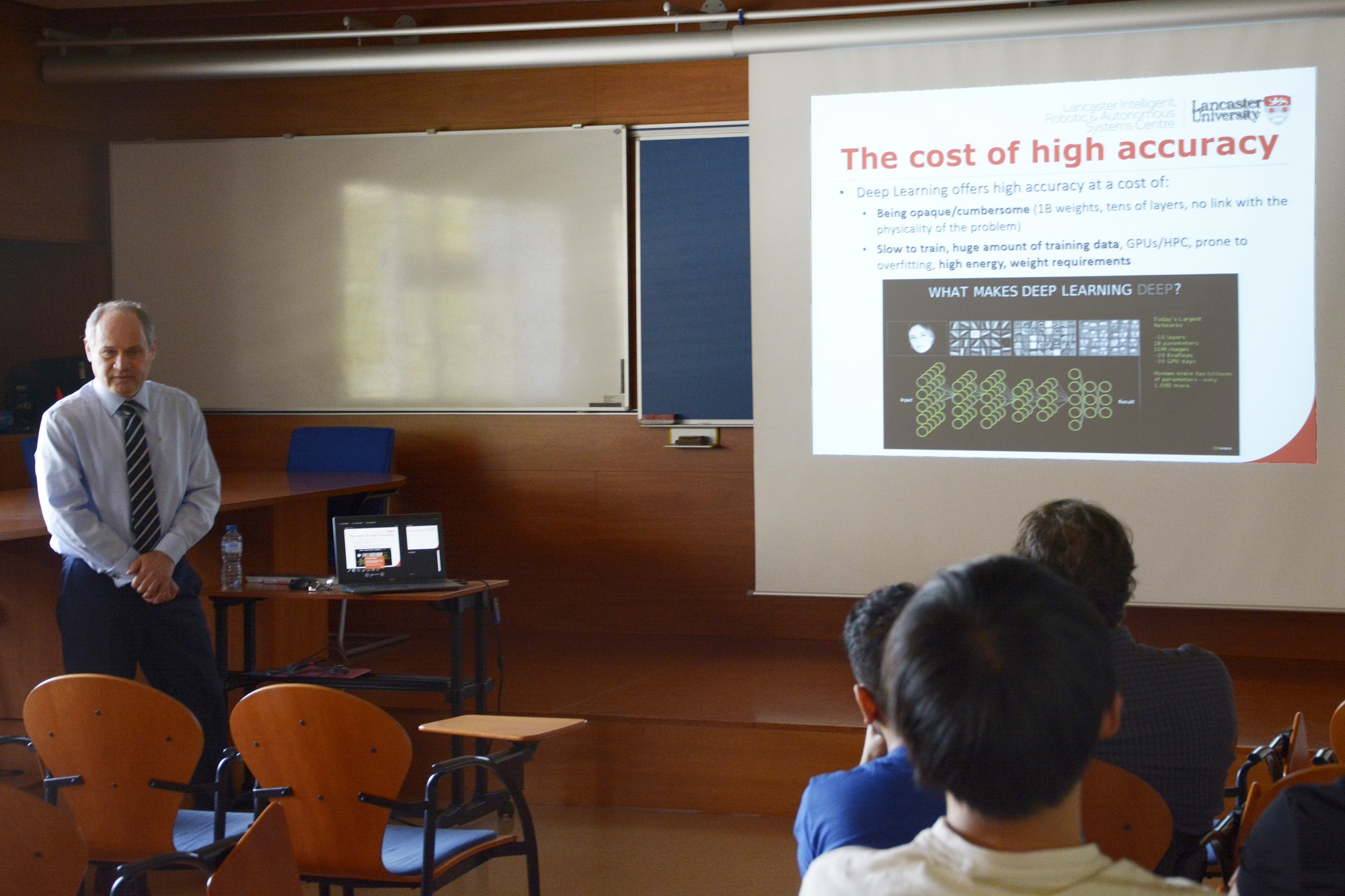

Deep learning has justifiably attracted the attention and interest of the scientific community and industry, as well as of the wider society and even policy makers. However, the predominant architectures (from Convolutional Neural Networks to Transformers) are hyper-parametric models whose weights/parameters are detached from the physical meaning of the object being modelled. They are, essentially, embedded functions of functions. This nesting provides the power of deep learning, but it is also the main reason for their diminished transparency and for the difficulty of explaining and interpreting the decisions made by deep neural network classifiers. Some dub this the “black box” approach. It makes the use of such algorithms problematic in high-stakes, complex problems such as aviation, health and decisions on bail, where a clear rationale for a particular decision is very important and errors are very costly. This has motivated researchers and regulators to focus their efforts on the quest for “explainable” yet highly efficient models. Most of the solutions proposed in this direction so far, however, are post hoc and only partially address the problem. At the same time, it is remarkable that humans learn in a fundamentally different manner (by examples, using similarities), not by fitting (hyper-)parametric models, and can easily perform so-called “zero-shot learning”. Current deep learning focuses primarily on accuracy and overlooks explainability, the semantic meaning of the internal model representation, reasoning and decision making, and their link with the specific problem domain. Once trained, such models are inflexible to new knowledge: they cannot dynamically evolve their internal structure to start recognising new classes, and they are good only for what they were originally trained for.
According to Terry Sejnowski, the empirical results achieved by these types of methods “should not be possible according to sample complexity in statistics and nonconvex optimization theory” [1]. The challenge is to combine high levels of accuracy with semantically meaningful, theoretically sound and provable solutions.

All these challenges and identified gaps require a dramatic paradigm shift and a radically new approach. In this talk, the speaker will present such an approach towards the next generation of explainable-by-design deep learning [2]-[5]. It is based on prototypes and uses kernel-like functions, which makes it interpretable by design. It is dramatically easier to train and can be adapted without complete re-training; it can start learning from a few training samples, explore the data space, and detect and learn from unseen data patterns [6]. Indeed, the ability to detect the unseen and unexpected, and to start learning the new class(es) in real time with little or no supervision, is critically important and is something that no currently existing classifier can offer. The method has been applied to a range of applications including, but not limited to, remote sensing [7]-[8], autonomous driving [2], [6] and health.
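
The general idea of prototype-based, similarity-driven classification can be illustrated with a minimal Python sketch. It is not the xDNN [2] or xClass [6] algorithm; it is a generic illustration that assumes a Cauchy-like similarity kernel, a running-mean prototype update and two hypothetical thresholds (`new_prototype_below`, `novelty_below`). All class and function names are hypothetical. Each decision is explained by the most similar stored prototype, new classes and prototypes are added without re-training, and a sample far from every prototype is flagged as unseen (label `None`).

```python
from dataclasses import dataclass, field


def cauchy_similarity(x, p, scale=1.0):
    """Cauchy-like kernel (an assumption): 1 when x == p, decaying towards 0 with distance."""
    d2 = sum((a - b) ** 2 for a, b in zip(x, p))
    return 1.0 / (1.0 + d2 / scale ** 2)


@dataclass
class Prototype:
    centre: list
    count: int = 1

    def absorb(self, x):
        # Running mean: update the prototype without storing past samples or re-training.
        self.count += 1
        self.centre = [c + (a - c) / self.count for c, a in zip(self.centre, x)]


@dataclass
class PrototypeClassifier:  # hypothetical illustration, not xDNN/xClass
    new_prototype_below: float = 0.5  # hypothetical: below this, start a new prototype
    novelty_below: float = 0.2        # hypothetical: below this, report an unseen pattern
    scale: float = 1.0
    classes: dict = field(default_factory=dict)

    def learn(self, x, label):
        """Add one labelled sample; an unknown label creates a new class on the fly."""
        protos = self.classes.setdefault(label, [])
        if not protos:
            protos.append(Prototype(list(x)))
            return
        best = max(protos, key=lambda p: cauchy_similarity(x, p.centre, self.scale))
        if cauchy_similarity(x, best.centre, self.scale) < self.new_prototype_below:
            protos.append(Prototype(list(x)))  # evolve the structure: add a prototype
        else:
            best.absorb(x)

    def predict(self, x):
        """Return (label, explaining prototype centre, similarity); label is None if unseen."""
        best_label, best_centre, best_sim = None, None, 0.0
        for label, protos in self.classes.items():
            for p in protos:
                s = cauchy_similarity(x, p.centre, self.scale)
                if s > best_sim:
                    best_label, best_centre, best_sim = label, p.centre, s
        if best_sim < self.novelty_below:
            return None, best_centre, best_sim
        return best_label, best_centre, best_sim


clf = PrototypeClassifier()
for sample, label in [((0.0, 0.0), "A"), ((0.2, 0.1), "A"), ((5.0, 5.0), "B")]:
    clf.learn(sample, label)
print(clf.predict((0.1, 0.0)))     # label 'A', explained by the prototype near (0.1, 0.05)
print(clf.predict((20.0, -20.0)))  # label None: far from every stored prototype
clf.learn((20.0, -20.0), "C")      # start recognising a new class without re-training
print(clf.predict((20.0, -20.0)))
```

Keeping whole prototypes (rather than opaque weights) is what makes each prediction explainable here: the answer comes with the stored example it most resembles.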

Short bio:

Prof. Angelov (MEng 1989, PhD 1993, DSc 2016) is a Fellow of the IEEE, of the IET and of ELLIS. He holds a Personal Chair in Intelligent Systems and is Director of Research at the School of Computing and Communications, Lancaster University, UK. Prof. Angelov is a Governor of the International Neural Network Society (INNS), having served as its Vice President for two terms until the end of 2020. He is also serving a second term as a Governor of the IEEE Systems, Man and Cybernetics Society, and is Program co-Director of the ELLIS Human-Centred Machine Learning program. Prof. Angelov has (co-)authored 380+ peer-reviewed publications in leading journals (e.g. TPAMI, Information Fusion, IEEE Transactions on Cybernetics) and peer-reviewed conference proceedings (e.g. CVPR, IJCNN), as well as 6 patents and 3 research monographs (Wiley, 2012; Springer, 2002 and 2019). His work has been cited over 13,800 times, with an h-index of 62. He is the founding Director of the LIRA (Lancaster Intelligent, Robotic and Autonomous systems) Research Centre, which includes over 70 academics and researchers across 15 departments from all faculties of the University. He has an active research portfolio in computational intelligence and machine learning, with internationally recognised results in online and evolving learning and explainable AI. Prof. Angelov leads numerous projects (including several multimillion ones) funded by UK research councils, the EU, industry and the UK MoD. His research was recognised by the 2020 Dennis Gabor Award “for outstanding contributions to engineering applications of neural networks”, as well as ‘The Engineer Innovation and Technology 2008 Special Award’ and ‘For outstanding Services’ (2013) by IEEE and INNS. He is also the founding co-Editor-in-Chief of Springer’s journal Evolving Systems and an Associate Editor of several leading international scientific journals, including IEEE Transactions on Cybernetics and IEEE Transactions on AI.
He has given over 30 keynote talks and was an IEEE Distinguished Lecturer, including talks in Silicon Valley. Prof. Angelov was General co-Chair of a number of high-profile conferences and a member of the International Program Committees of 100+ international conferences (primarily IEEE).

[1] T. J. Sejnowski, The unreasonable effectiveness of deep learning in AI, PNAS, 117(48): 30033-30038, 28 Jan. 2020.

[2] E. A. Soares, P. Angelov, Towards Explainable Deep Neural Networks (xDNN), Neural Networks, 130: 185-194, October 2020.

[3] P. P. Angelov, X. Gu, Toward anthropomorphic machine learning, IEEE Computer, 51(9):18–27, 2018.

[4] P. Angelov, X. Gu, Empirical Approach to Machine Learning, Springer, 2019, ISBN 978-3-030-02383-6.

[5] P. Angelov, X. Gu, J. Principe, A generalized methodology for data analysis, IEEE Transactions on Cybernetics, 48(10): 2981-2993, Oct 2018.

[6] E. A. Soares, P. Angelov, Detecting and Learning from Unknown by Extremely Weak Supervision: eXploratory Classifier (xClass), Neural Computing and Applications, 2021, also in https://arxiv.org/pdf/1911.00616.pdf

[7] X. Gu, P. P. Angelov, C. Zhang, P. M. Atkinson, A Semi-Supervised Deep Rule-Based Approach for Complex Satellite Sensor Image Analysis, IEEE Transactions on Pattern Analysis and Machine Intelligence, TPAMI, 44(5): 2281-2292, DOI: 10.1109/TPAMI.2020.3048268, May 2022.

[8] X. Gu, C. Zhang, Q. Shen, J. Han, P. P. Angelov, P. M. Atkinson, A Self-Training Hierarchical Prototype-based Ensemble Framework for Remote Sensing Scene Classification, Information Fusion, 80: 179-204, April 2022.

[9] Z. Jiang, H. Rahmani, P. Angelov, S. Black, B. Williams, Graph-context Attention Networks for Size-varied Deep Graph Matching, IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR 2022), 19-24 June 2022, New Orleans, USA, pp. 2343-2352.