Overview

One of the main pillars of the CVC’s mission is outreach. The CVC is committed to making its research accessible to all kinds of audiences, working to offer interesting content, scientific dissemination activities and citizen science programmes to bring Computer Vision and Artificial Intelligence closer to citizens.

The CVC’s outreach actions aim to increase general knowledge of science and innovation within society, promote research results at local, national and international levels, and encourage scientific vocations among young people.

Since 2021, the CVC has been recognized as a Unit of Scientific Culture and Innovation (UCC+i) by the Spanish Foundation for Science and Technology (FECYT) and is part of the Spanish UCC+i Network. UCC+i units act as intermediaries between research centers and citizens, with the main aim of promoting scientific, technological and innovation culture and knowledge through a range of activities.

If you would like to receive information about the outreach activities organized by the CVC, leave us your contact details and we will e-mail you when we have news.

Outreach news & events

Social impact of AI projects

EPSP

EU-funded project to bridge the gap between science and society.

Activities with Schools, High Schools & Universities

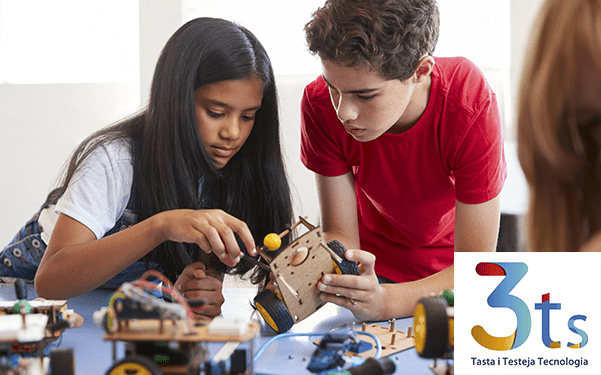

School & High School visits

Throughout the year, the CVC hosts visits from schools and high schools.

Research, Creation and Service Program

The RCS program fosters learning through organization-driven challenges.

Badamonia

A technological experiment in creativity and AI based on a co-creative proposal for the design of the Dimoni of Badalona.

CROMA 2.0 Program

Collaboration in the CROMA 2.0 programme of the Autònoma Solidària Foundation (FAS).

ARGÓ Program

Workshop on AI for high school students as part of the UAB's ARGÓ program.

Outreach activities

Resources

CVC Rue

Interactive infographic to discover more about CVC projects and applications.

Virtual CVC

Take a virtual tour of CVC's premises and labs.

CVC Newsletter

All CVC news, activities and events gathered in one place.